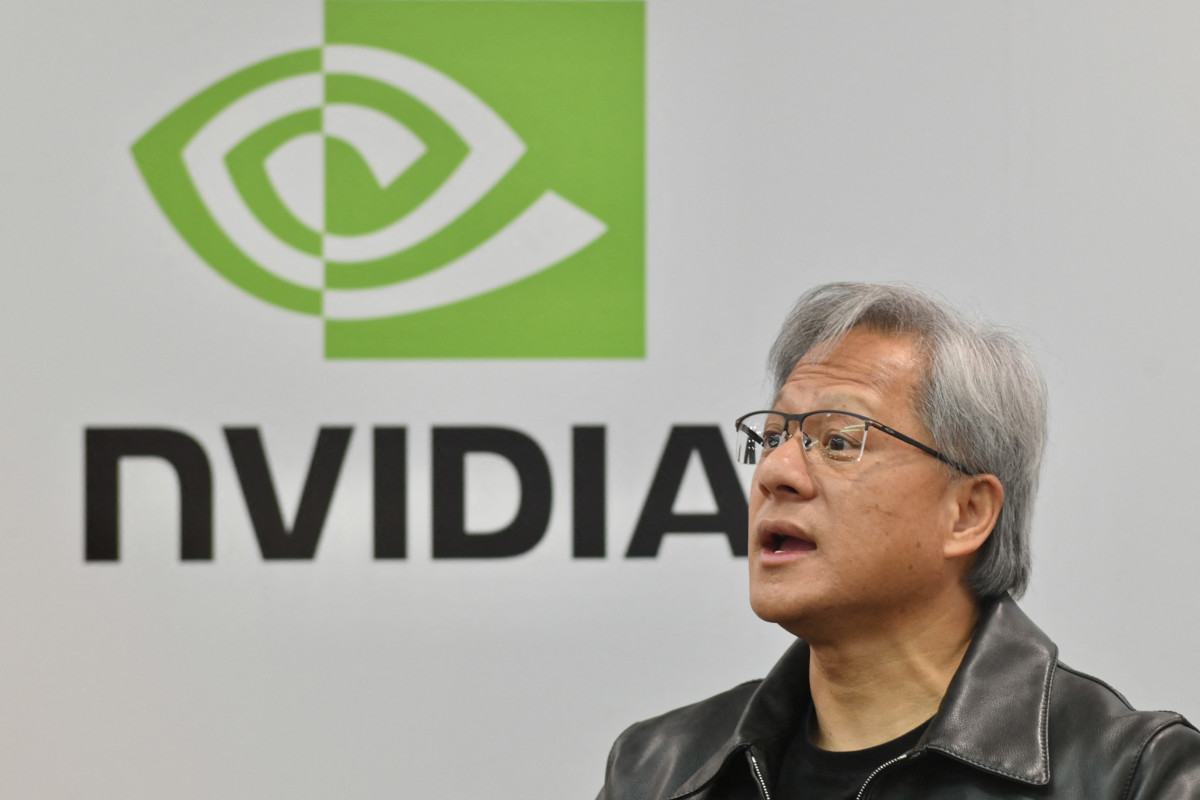

DeepSeek selloff shows AI's future might not need Nvidia

Here's why the DeepSeek-triggered tech selloff may have been justified.

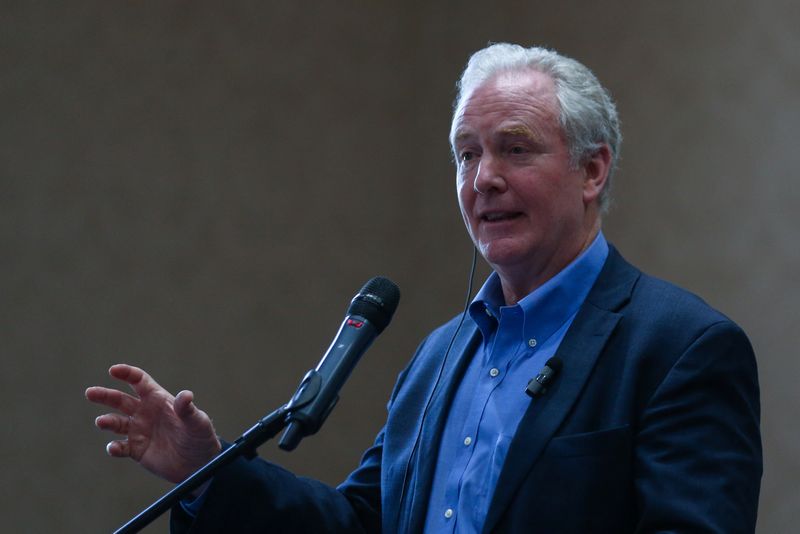

Nvidia (NVDA) stock took a hit earlier this year after Chinese startup DeepSeek unveiled a low-cost AI chatbot, threatening the chipmaker's dominance. Kim Forrest, chief investment officer at Bokeh Capital Partners, joined TheStreet to discuss why this selloff may have been warranted.

Full Video Transcript Below:

CONWAY GITTENS: But now that we have Nvidia's (NVDA) results and a pretty healthy outlook for the current quarter, how does the DeepSeek selloff that hit the tech sector look in hindsight? Especially given your idea that we might have too many AI models, you know, out there, do you think the selloff was warranted or overblown?

KIM FORREST: I think it was warranted. And for this main reason: OpenAI has been incredibly successful in building a large language model. I'm not going to nerd out and tell you all the intricacies that I find so fascinating, but they've done an excellent job. But the way they've done this is they've trained a whole bunch of neural networks and then combined them, and they do it through what I would consider a brute-force method. They make it read the internet. OK, so that brute-force method called for tons and tons and tons of, you know, processors to go out there and read the internet over and over to train. That's how neural networks do things. They look at things millions, billions, trillions of times, and they're creating, you know, data from this that they can then retrieve and use. All right.

So DeepSeek showed people, investors, that there might be other ways to train. And I think that's key, that we may not need the volumes that OpenAI has been using, and that other techniques have to be created by computer scientists to be able to better train these networks. And I think that's really the conundrum. How long is this going to take? The brute-force method doesn't seem to be returning the gains that it had. Because if you look at OpenAI, they keep saying they're going to come out with the next model that's going to be, you know, logarithmically better. And yet they're about 18 months behind. And I think it's because the old way of just shoving tons of data and letting the neural network chew on it isn't working. So DeepSeek has shown us there are other ways that we should be looking at it, and it doesn't involve vast amounts of data centers.