Want to Unlock the True Potential of Google's Gemini AI? This Guide Will Show You How!

Large language models (LLMs) like Gemini are revolutionizing how we find and use information. These models can perform a wide range of tasks, from generating creative text to answering detailed questions.

But to get the most out of them, you need to understand the different ways of working with them and when to use each. This article covers two primary methods for adapting Gemini, plus a group of complementary techniques:

- Retrieval Augmented Generation (RAG)

- Fine-tuning

- Other approaches, such as prompt engineering and function calling

We'll explore their differences, strengths, and weaknesses, providing a comprehensive guide to help you maximize your experience with Gemini.

Retrieval Augmented Generation (RAG)

Imagine an "open-book exam" where the LLM can access external information to answer questions more accurately, instead of relying solely on its internal knowledge ("closed-book exam").

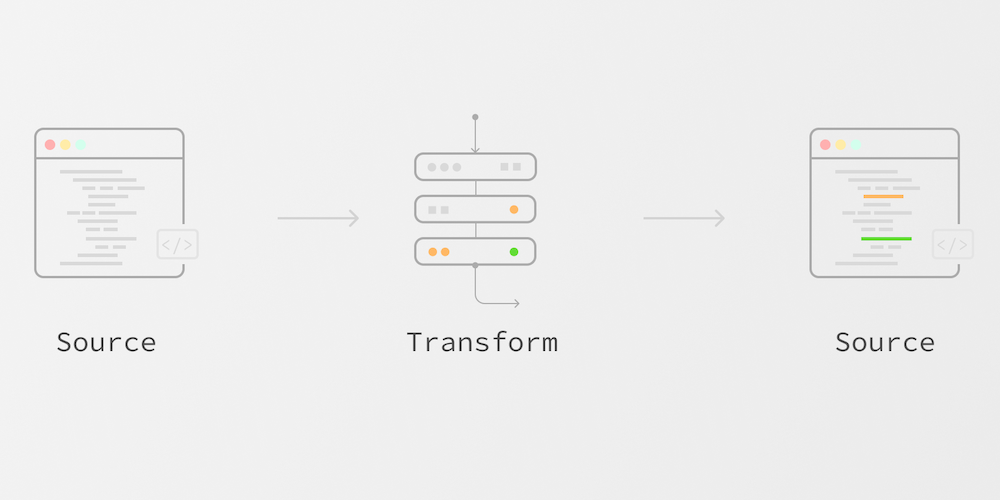

How RAG Works with Gemini

RAG enhances the accuracy and relevance of LLM responses by incorporating external data sources. Here's how it works:

- Gather External Data Sources – Information is collected from web pages, knowledge bases, and databases.

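- Process and Store Data – The data is split into chunks, converted into embeddings (numeric vectors that capture meaning), and stored in a vector database.

- User Query – The user submits a query.

- Similarity Search – The query is converted into an embedding, and the system searches the vector database for the chunks most similar to it.

- Data Retrieval – The most relevant chunks are retrieved and added to the prompt sent to Gemini as context.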

- Response Generation – Gemini generates a response based on both its internal knowledge and the external data.
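The steps above can be sketched in a few lines of Python. This is a minimal, self-contained illustration, not Gemini's or any library's API: the bag-of-words `embed` function is a toy stand-in for a real embedding model, the in-memory `INDEX` list stands in for a vector database, and the document IDs and texts are made up. The final prompt is what you would send to Gemini through whichever SDK you use; that call is omitted here.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base: (source_id, text) pairs.
DOCUMENTS = [
    ("kb-001", "Refunds are processed within 5 business days of approval."),
    ("kb-002", "Premium support is available 24/7 via chat and phone."),
    ("kb-003", "Passwords must be reset every 90 days for admin accounts."),
]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Gather + Process and Store: keep (id, text, vector) in an in-memory index.
INDEX = [(doc_id, text, embed(text)) for doc_id, text in DOCUMENTS]


def retrieve(query: str, k: int = 2) -> list[tuple[str, str]]:
    """Similarity Search: embed the query and return the k most similar chunks."""
    q = embed(query)
    ranked = sorted(INDEX, key=lambda item: cosine(q, item[2]), reverse=True)
    return [(doc_id, text) for doc_id, text, _ in ranked[:k]]


def build_prompt(query: str, k: int = 2) -> str:
    """Data Retrieval: add the retrieved chunks, tagged with their IDs, to the prompt."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieve(query, k))
    return (
        "Answer using only the context below and cite the source IDs you used.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    # This string is the input for Response Generation.
    print(build_prompt("How long do refunds take?", k=1))
```

Swapping `embed` for a real embedding model and `INDEX` for a vector database changes the quality of retrieval, not the shape of the pipeline. Tagging each chunk with its source ID is also what makes the transparency benefit below possible.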

Benefits of Using RAG with Gemini

- Access to Fresh Information – Overcomes the limitation of outdated training data.

- Factual Grounding – Reduces hallucinations by basing responses on retrieved source material that can be checked.

- Domain-Specific Responses – Tailors Gemini's responses using specialized knowledge bases.

- Cost-Effectiveness – Usually cheaper than fine-tuning, because updating the knowledge base doesn't require retraining the model.

- Enhanced Transparency – Responses can cite the retrieved sources, so users can verify the answer.

- Privacy Protection – Sensitive data stays in a data store you control rather than being built into model weights; only the snippets retrieved for a query are sent to the model.

Examples of RAG with Gemini

- AI Chatbots – Customer support bots retrieving current answers from company knowledge bases.

- Financial Forecasting – Grounding analysis in the latest market data and financial records.

- Medical Information Systems – Providing up-to-date clinical guidelines and research papers.

Fine-Tuning

Fine-tuning is like giving an LLM specialized training. You take a pre-trained Gemini model and further train it on a smaller, domain-specific dataset to refine its capabilities.

How Fine-Tuning Works with Gemini

- Prepare the Dataset – Gather and format the training data.

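- Choose a Fine-Tuning Method – Options include supervised instruction tuning, reinforcement learning from human feedback (RLHF), and parameter-efficient (adapter-based) tuning.

- Fine-Tune the Model – Update the model's weights, or a small set of added adapter weights, based on the dataset.

- Evaluate the Model – Test its accuracy and performance on a held-out dataset that was not used for training.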
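Preparing the dataset and evaluating the result are the parts of this workflow you write yourself; the tuning run itself is handled by the managed tuning service. The sketch below formats example pairs as JSON Lines, holds out a separate evaluation set, and scores predictions by exact match. The chat-style record layout (`contents` with `user` and `model` turns) is an assumption modeled on Vertex AI's supervised-tuning format, so check the current documentation for the exact schema. The example pairs and helper names are hypothetical.

```python
import json
import random

# Hypothetical (prompt, ideal response) pairs for a summarization task.
RAW_EXAMPLES = [
    ("Summarize: The team meeting moved from Monday to Wednesday at 10am.",
     "Meeting moved to Wednesday, 10am."),
    ("Summarize: Invoice 4411 was paid in full on March 3.",
     "Invoice 4411 paid March 3."),
    ("Summarize: The server will be offline Saturday from 1am to 3am for patches.",
     "Server offline Saturday 1-3am for patching."),
    ("Summarize: Alice replaces Bob as the project lead starting next sprint.",
     "Alice is project lead from next sprint."),
    ("Summarize: The office is closed on Friday due to the public holiday.",
     "Office closed Friday (holiday)."),
]


def to_record(prompt: str, response: str) -> dict:
    """Prepare the Dataset: format one pair as a chat-style record.

    The "contents"/"role"/"parts" layout is an assumed schema; confirm it
    against the documentation of the tuning service you use.
    """
    return {
        "contents": [
            {"role": "user", "parts": [{"text": prompt}]},
            {"role": "model", "parts": [{"text": response}]},
        ]
    }


def train_eval_split(examples, eval_fraction=0.2, seed=42):
    """Shuffle reproducibly and hold out a separate set for evaluation."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_eval = max(1, int(len(shuffled) * eval_fraction))
    return shuffled[n_eval:], shuffled[:n_eval]


def to_jsonl(examples) -> str:
    """Serialize examples as JSON Lines: one record per line."""
    return "\n".join(json.dumps(to_record(p, r)) for p, r in examples) + "\n"


def _normalize(text: str) -> str:
    """Lowercase and collapse whitespace before comparing."""
    return " ".join(text.lower().split())


def exact_match(predictions, references) -> float:
    """Evaluate the Model: share of predictions that equal their reference."""
    hits = sum(_normalize(p) == _normalize(r) for p, r in zip(predictions, references))
    return hits / len(references) if references else 0.0


train, held_out = train_eval_split(RAW_EXAMPLES)
train_jsonl = to_jsonl(train)  # contents of the training file for the tuning job
```

Exact match is a deliberately strict metric; for summarization you would usually add a softer score or human review, but the important part is that the held-out examples never appear in `train_jsonl`.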

Benefits of Fine-Tuning Gemini

- Enhanced Performance – Improves accuracy on specialized tasks.

- Domain Adaptation – Makes Gemini better suited for specific industries.

- Customization – Adjusts response style, format, and structure.

- Alignment – Helps the model follow predefined guidelines and policies.

Examples of Fine-Tuning Gemini

- Question Answering – Improves accuracy in answering domain-specific queries.

- Text Summarization – Enhances Gemini's ability to generate high-quality summaries.

- Masking PII – Teaches the model to detect and redact personally identifiable information automatically.

⚠️ Fine-tuning is not ideal for real-time or frequently updated information, because the model only knows what was in its training data until it is tuned again. In such cases, RAG is a better approach.

Other Methods for Using Gemini

Enhancing Interaction

- Prompt Engineering – Crafting effective prompts to guide Gemini’s behavior.

- In-Context Learning – Including a few examples in the prompt so the model can infer the desired task and output format, without changing its weights.
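Prompt engineering and in-context learning often meet in a single few-shot prompt: a clear instruction, followed by a few labeled examples, followed by the new input. The helper below is a hypothetical sketch that only builds the prompt string; the ticket-classification task and its labels are made up, and sending the string to Gemini is left to your SDK of choice.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One labeled demonstration shown to the model."""
    text: str
    label: str


def build_few_shot_prompt(instruction: str, examples: list[Example], query: str) -> str:
    """Assemble instruction + labeled examples + the new input into one prompt."""
    parts = [instruction.strip(), ""]
    for ex in examples:
        parts.append(f"Input: {ex.text}\nOutput: {ex.label}\n")
    # End with an open "Output:" so the model completes the pattern.
    parts.append(f"Input: {query}\nOutput:")
    return "\n".join(parts)


EXAMPLES = [
    Example("The checkout page keeps timing out.", "bug"),
    Example("Could you add a dark mode?", "feature_request"),
    Example("How do I export my data to CSV?", "question"),
]

prompt = build_few_shot_prompt(
    "Classify each support ticket as bug, feature_request, or question. "
    "Reply with the label only.",
    EXAMPLES,
    "The app crashes when I upload a photo.",
)
print(prompt)
```

The instruction constrains the output (prompt engineering), while the examples show the expected format and label set (in-context learning). Neither changes the model itself, which is why these techniques are the quickest to iterate on.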

Expanding Functionality

- Function Calling – Allows Gemini to interact with external systems and APIs.

- Multimodal Capabilities – Supports text, images, audio, and video for richer interactions.

- Extensions – Connects Gemini with Google apps (e.g., Gmail, Drive, Maps, YouTube).
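With function calling, the model does not execute anything itself: you declare the functions it may use, Gemini can respond with a structured call (a function name plus arguments), and your code runs that call and returns the result to the model. The sketch below shows only the application side. The declaration is modeled on the JSON-Schema style (`type`, `properties`, `required`) used for function declarations, but exact field spelling can differ between SDK versions, so check the docs. The `get_exchange_rate` function, its fake rates, and the `dispatch` helper are hypothetical, and the model's reply is simulated as a plain dict rather than produced by a real API call.

```python
import json
from typing import Any

# Hypothetical function declaration that would be sent to the model with the request.
GET_EXCHANGE_RATE = {
    "name": "get_exchange_rate",
    "description": "Return the exchange rate between two currencies.",
    "parameters": {
        "type": "object",
        "properties": {
            "base": {"type": "string", "description": "ISO currency code, e.g. USD"},
            "target": {"type": "string", "description": "ISO currency code, e.g. EUR"},
        },
        "required": ["base", "target"],
    },
}

# Fake data for illustration only; a real implementation would call a rates API.
_FAKE_RATES = {("USD", "EUR"): 0.92, ("EUR", "USD"): 1.09}


def get_exchange_rate(base: str, target: str) -> dict[str, Any]:
    """Local function the application executes when the model asks for it."""
    key = (base.upper(), target.upper())
    if key not in _FAKE_RATES:
        return {"error": f"no rate for {key[0]}->{key[1]}"}
    return {"base": key[0], "target": key[1], "rate": _FAKE_RATES[key]}


# Map each declared name to (declaration, implementation).
TOOLS = {GET_EXCHANGE_RATE["name"]: (GET_EXCHANGE_RATE, get_exchange_rate)}


def dispatch(function_call: dict[str, Any]) -> dict[str, Any]:
    """Validate and run a model-proposed call shaped like {"name": ..., "args": {...}}."""
    name = function_call.get("name")
    args = function_call.get("args", {})
    if name not in TOOLS:
        return {"error": f"unknown function: {name}"}
    declaration, fn = TOOLS[name]
    missing = [p for p in declaration["parameters"]["required"] if p not in args]
    if missing:
        return {"error": f"missing arguments: {missing}"}
    # Drop any arguments the declaration does not define.
    allowed = declaration["parameters"]["properties"]
    return fn(**{k: v for k, v in args.items() if k in allowed})


# Simulated model output: a structured call instead of text. You would run it and
# send the result back to the model in the next turn so it can write the answer.
model_call = {"name": "get_exchange_rate", "args": {"base": "usd", "target": "eur"}}
result = dispatch(model_call)
print(json.dumps(result))  # prints {"base": "USD", "target": "EUR", "rate": 0.92}
```

Validating the model's arguments against your own declaration before executing anything is a cheap safeguard, since the model proposes calls but your code decides whether to run them.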

When to Use Each Method

| Method | Description | Use Cases |
|---|---|---|
| RAG | Incorporates external data sources | Ideal for up-to-date, fact-based responses (e.g., AI chatbots, financial forecasting, healthcare) |
| Fine-Tuning | Trains the model on a custom dataset | Best for improving accuracy in specific tasks (e.g., summarization, privacy protection) |
| Prompt Engineering | Crafts effective prompts | Great for quick, flexible control of outputs |
| In-Context Learning | Provides examples in prompts | Helps guide the model’s understanding with a few examples |
| Function Calling | Connects to APIs & external systems | Useful for automation and system integration |
| Multimodal Capabilities | Processes text, images, audio | Great for analyzing diverse data formats |
| Extensions | Connects to Google apps | Ideal for retrieving real-time information from Google services |

Often, a combination of these methods yields the best results.

For example:

- Use RAG to provide up-to-date information.

- Fine-tune for domain-specific improvements.

- Use prompt engineering for output refinement.

- Use function calling to integrate external services.

Conclusion

Gemini, with its diverse capabilities and interaction methods, is a powerful tool for various applications.

Understanding the differences between RAG, fine-tuning, and other techniques will help you maximize Gemini's potential—whether for creative content generation, automation, or data analysis.
