The DeepSeek Series: A Technical Overview

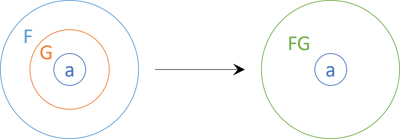

The appearance of DeepSeek Large-Language Models has caused a lot of discussion and angst since their latest versions appeared at the beginning of 2025. But much of the value of DeepSeek's work comes from the papers they have published over the last year. Shayan Mohanty provides an overview of these papers, highlighting three main arcs in this research: a focus on improving cost and memory efficiency, the use of HPC Co-Design to train large models on limited hardware, and the development of emergent reasoning from large-scale reinforcement learning more…

The appearance of DeepSeek Large-Language Models has caused a lot of discussion and angst since their latest versions appeared at the beginning of 2025. But much of the value of DeepSeek's work comes from the papers they have published over the last year. Shayan Mohanty provides an overview of these papers, highlighting three main arcs in this research: a focus on improving cost and memory efficiency, the use of HPC Co-Design to train large models on limited hardware, and the development of emergent reasoning from large-scale reinforcement learning