OpenAI ex-chief scientist planned for a doomsday bunker for the day when machines become smarter than man

Sutskever believed his AI researchers needed protection once they ultimately achieved their goal of creating artificial general intelligence, or AGI.

- Months before he left OpenAI, Sutskever believed his AI researchers needed to be assured protection once they ultimately achieved their goal of creating artificial general intelligence, or AGI. “Of course, it’s going to be optional whether you want to get into the bunker,” he told his team.

If there is one thing that Ilya Sutskever knows, it is the opportunities—and risks—that stem from the advent of artificial intelligence.

An AI safety researcher and one of the top minds in the field, he served for years as the chief scientist of OpenAI. There he had the explicit goal of creating deep learning neural networks so advanced they would one day be able to think and reason just as well as, if not better than, any human.

Artificial general intelligence, or simply AGI, is the official term for that goal. It remains the holy grail for researchers to this day—a chance for mankind at last to give birth to its own sentient lifeform, even if it’s only silicon and not carbon based.

But while the rest of humanity debates the pros and cons of a technology even experts struggle to truly grasp, Sutskever was already planning for the day his team would finish first in the race to develop AGI.

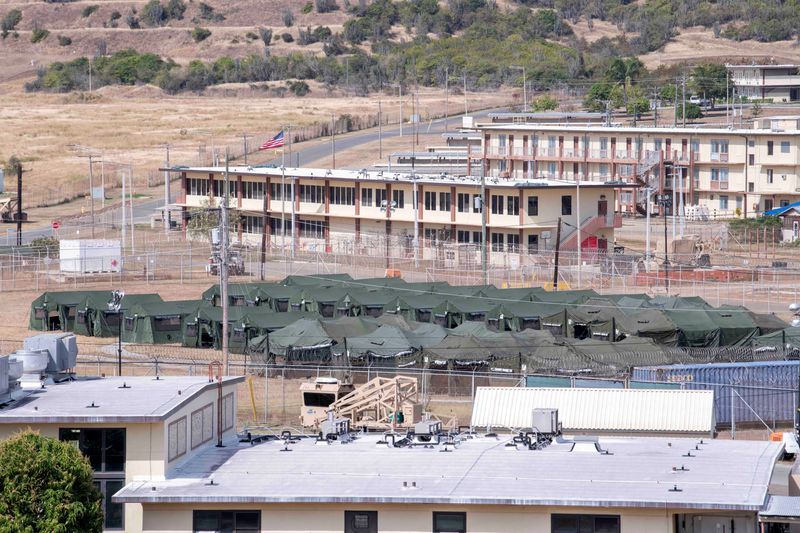

According to excerpts published by The Atlantic from a new book called Empire of AI, part of those plans included a doomsday shelter for OpenAI researchers.

“We’re definitely going to build a bunker before we release AGI,” Sutskever told his team in 2023, months before he would ultimately leave the company.

Sutskever reasoned his fellow scientists would require protection at that point, since the technology was too powerful for it not to become an object of intense desire for governments globally.

“Of course, it’s going to be optional whether you want to get into the bunker,” he assured fellow OpenAI scientists, according to people present at the time.

Mistakes lead to swift return of Sam Altman

Written by former Wall Street Journal correspondent Karen Hao and based on interviews with some 90 current and former company employees either directly involved or with knowledge of events, Empire of AI reveals new information regarding the brief but spectacular coup that led to the ouster of Sam Altman as CEO in November 2023 and what it meant for the company behind ChatGPT.

The book pins much of the responsibility on Sutskever and chief technology officer Mira Murati, whose concerns over Altman’s alleged fixation on paying the bills at the cost of transparency were at the heart of the non-profit board sacking him.

The duo apparently acted too slowly, failing to consolidate their power and win key executives over to their cause. Within a week Altman was back in the saddle and soon almost the entire board of the non-profit would be replaced. Sutskever and Murati both left within the span of less than a year.

Neither OpenAI nor Sutskever responded to a request for comment from Fortune sent outside normal business hours. Ms. Murati could not be reached.

‘Building AGI will bring about a rapture’

Sutskever knows better than most what the awesome capabilities of AI are. Together with renowned researcher and mentor Geoff Hinton, he was part of an elite trio behind the 2012 creation of AlexNet, often dubbed by experts as the Big Bang of AI.

Recruited by Elon Musk personally to join OpenAI three years later, he would go on to lead its efforts to develop AGI.

But the launch of its ChatGPT bot accidentally derailed his plans by unleashing a funding gold rush the safety-minded Sutskever could no longer control and that played into rival Sam Altman’s hands, the excerpts state. Much of this has been reported on by Fortune, as well.

Ultimately it led to the fateful confrontation with Altman and Sutskever’s subsequent departure, along with that of many like-minded OpenAI safety experts who worried the company no longer sufficiently cared about aligning AI with the interests of mankind at large.

“There is a group of people, Ilya being one of them, who believe that building AGI will bring about a rapture,” said one researcher Hao quotes, who was present at the time Sutskever revealed his plans for a bunker. “Literally a rapture.”

This story was originally featured on Fortune.com